OpenAI has released OpenAI o3-mini, the newest and most cost-efficient model in its reasoning series. First previewed in December 2024, it is available in both ChatGPT and the API today. The model pushes forward what small models can do: it performs especially well in STEM fields (particularly science, math, and coding) while keeping the low cost and reduced latency of OpenAI o1-mini.

OpenAI o3-mini is OpenAI’s first small reasoning model to support several highly requested developer features: function calling, structured outputs, and developer messages. These make it ready for production use from day one. Like OpenAI o1-mini and o1-preview, o3-mini also supports streaming. Developers can choose among three reasoning effort levels (low, medium, and high) to match their use case: the “high” setting trades extra latency for better reasoning accuracy on complex tasks, while the “low” setting suits tasks where speed matters more than depth.
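The reasoning effort setting and developer messages described above can be sketched as a Chat Completions request body. This is a minimal illustration rather than an official example: it assumes the model identifier `o3-mini`, the `reasoning_effort` request parameter, and the `developer` message role. The helper `build_request` and the prompts are hypothetical, and no request is actually sent.

```python
from typing import Any

# The three effort levels named in the announcement.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium") -> dict[str, Any]:
    """Build a Chat Completions request body for o3-mini (hypothetical helper)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",  # assumed model identifier
        "reasoning_effort": effort,  # assumed parameter name
        "messages": [
            # A developer message carries instructions from the application.
            {"role": "developer", "content": "You are a careful coding assistant."},
            {"role": "user", "content": prompt},
        ],
    }


# Favor speed for a simple task and depth for a hard one.
fast = build_request("Rename this variable consistently.", effort="low")
deep = build_request("Prove that this scheduler cannot deadlock.", effort="high")
print(fast["reasoning_effort"], deep["reasoning_effort"])
```

With the official `openai` Python package, a body like this could be passed as keyword arguments, for example `client.chat.completions.create(**deep)`, assuming a package version that recognizes these parameters.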

OpenAI o3-mini does not support vision, however, so developers who need visual reasoning should continue to use OpenAI o1. The model is rolling out in the Chat Completions API, Assistants API, and Batch API, starting with select developers in API usage tiers 3-5. Access is expected to extend to more developers in the near future.
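For the Batch API mentioned above, requests are submitted as a JSONL file with one request per line. The sketch below builds such lines for the Chat Completions endpoint. It assumes the `custom_id`, `method`, `url`, and `body` line layout described in OpenAI's Batch API documentation and the model identifier `o3-mini`; the helper `to_batch_lines`, the `task-N` IDs, and the prompts are hypothetical. Because o3-mini does not support vision, the messages contain text only.

```python
import json


def to_batch_lines(prompts: list[str], model: str = "o3-mini") -> list[str]:
    """Serialize text-only prompts as Batch API input lines (hypothetical helper)."""
    lines = []
    for i, prompt in enumerate(prompts, start=1):
        request = {
            "custom_id": f"task-{i}",  # lets results be matched back to inputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return lines


batch_lines = to_batch_lines(
    ["Factor x^2 - 5x + 6.", "Explain the time complexity of merge sort."]
)
print("\n".join(batch_lines))
```

Writing `batch_lines` to a `.jsonl` file, one entry per line, would give a file in the shape the Batch API expects as input, under the assumptions stated above.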

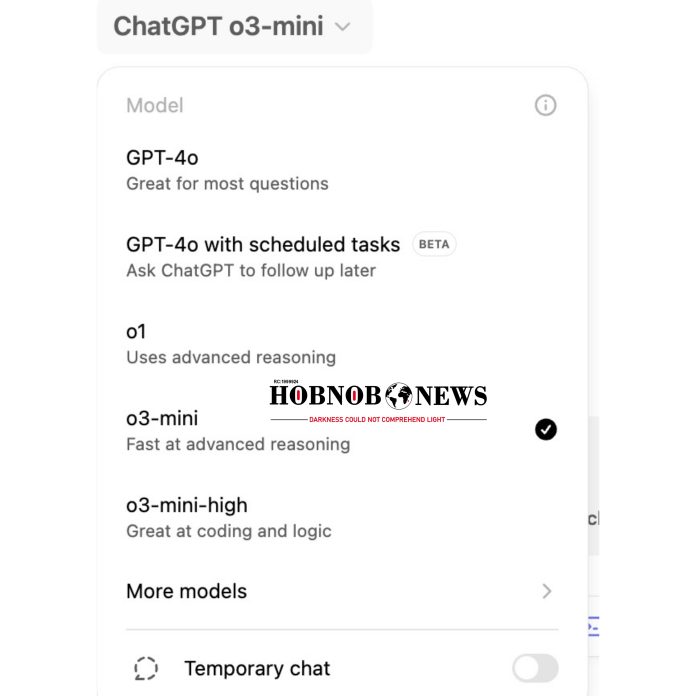

In ChatGPT, OpenAI o3-mini is available today to Plus, Team, and Pro users, with Enterprise access coming in about a week. It replaces OpenAI o1-mini in the model picker, offering higher rate limits and lower latency, which makes it a strong choice for coding, STEM, and logical problem-solving tasks. As part of this release, OpenAI is tripling the rate limit for Plus and Team users, from 50 messages per day with o1-mini to 150 messages per day with o3-mini.

o3-mini also works with search, so it can provide up-to-date answers with links to relevant web sources. This is still an early prototype, but it is a step toward integrating search across OpenAI’s reasoning models, and it should be most useful to users who need current information.

Free plan users can also try OpenAI o3-mini, either by selecting the “Reason” option in the message composer or by regenerating a response. This is the first time a reasoning model has been made available to free users in ChatGPT, giving them a firsthand look at the precision and speed of OpenAI’s reasoning models.

While OpenAI o1 remains the broader general-knowledge reasoning model, OpenAI o3-mini is a specialized alternative for technical domains that demand accuracy and speed. In ChatGPT, o3-mini uses medium reasoning effort, balancing quick responses against precision. All paid users can also opt for o3-mini-high, a higher-intelligence version that takes a little longer to generate responses but reasons more accurately. Pro users have unlimited access to both o3-mini and o3-mini-high.

With OpenAI o3-mini, the company pairs advanced technical capabilities with low cost and low latency. The model positions OpenAI to meet growing demand for specialized, cost-efficient models that excel at technical reasoning, for developers and ChatGPT users alike.